User Acceptance Testing in Agile

In user acceptance testing in Agile, the terms below have the following definitions:

- Validation: the process of ensuring we are building the right system.

- Verification: the process of checking we are building the system right.

Typical Forms of Acceptance Testing

- User Acceptance Testing

- Operational (acceptance) testing

- Contractual and regulatory acceptance testing

- Alpha and beta (or field) testing

User Acceptance Testing: according to the International Software Testing Qualifications Board (ISTQB), user acceptance testing is formal testing with respect to user needs, requirements, and business processes, conducted to determine whether a system satisfies the acceptance criteria and to enable the user, customers and/or other authorized entity to determine whether to accept the system.

UAT in Agile Projects

UAT in an agile methodology is subtly different from UAT in more traditional waterfall projects. The test cases are usually based on the “user stories” and “acceptance criteria” produced during each sprint. Acceptance test cases are ideally created during sprint planning (the iteration planning meeting), which precedes development, so that the developers have a clear idea of what to develop. A user story is not considered complete until its acceptance tests have passed.

Acceptance tests are created by the client’s business customers and are high-level tests of the completeness of the user story or stories 'played' during a sprint/iteration. Ideally, these tests are created through collaboration between business customers, business analysts, testers and developers; however, the business customers (product owners) are the primary owners of these tests.

As the user stories pass their acceptance criteria, the business owners can be sure that the developers are progressing in the right direction, that is, building the application the way it was envisaged to work. It is therefore essential that these tests include both business logic tests and UI validation elements (where the UI needs to be tested).

Responsibility

UAT is always performed by the client, and the client always provides the final sign-off of the UAT phase. Once UAT is complete, the system is considered appropriate for deployment and can be transitioned into the client’s live environment. This usually marks the end of a project and allows the professional services engagement to transition to a close.

Environment

UAT is always performed on the final production system so as to ensure that the testing results are truly indicative of the system’s final performance and experience. As it is meant to reflect true live usage, it should include all relevant integration points with external systems, so that the system is fully tested before being deployed into a live environment.

Review Phase

On successful completion of UAT, a test report will be created and signed off by the client to indicate acceptance of UAT. This document, or a separate sign-off document, represents acceptance of the deliverables as per the statement of work, and permission to deploy the system into the live environment.

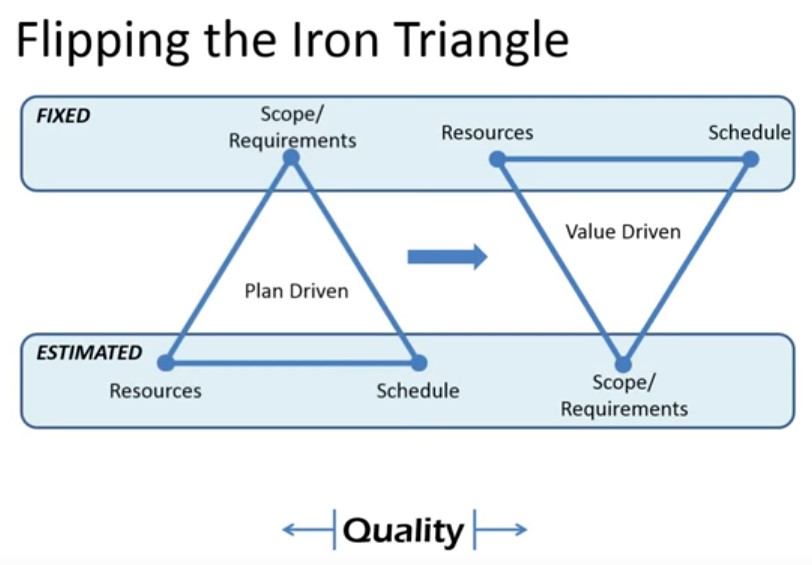

In a traditional software development life cycle, we fix our scope: we have a baseline set of requirements, and we then develop a plan in which we estimate the budget, the resources and the timeline needed to deliver all those requirements. This is basically a plan-driven approach. What often suffers with a plan-driven approach is quality, mainly because, to deliver all the requirements within the plan, we often have to cut back on testing.

In agile, however, there is a total change of mindset: we fix our budget, resources and schedule, typically by time-boxing and by having a fixed date on which we are going to release. It is very much a value-driven approach, because at any point in time we can change our focus and priorities based on what will deliver the most value to our customers right now. It is therefore about embracing change by planning over a series of iterations/sprints, for example by deciding what scope we are going to deliver over a given time frame (sprint).

The key point is to preserve quality as we go ahead with development in each sprint, rather than trying to test the deliverables at the end. Testing therefore becomes an integral part of development (for example, user acceptance testing could be done at the end of each sprint), and that is the key difference.

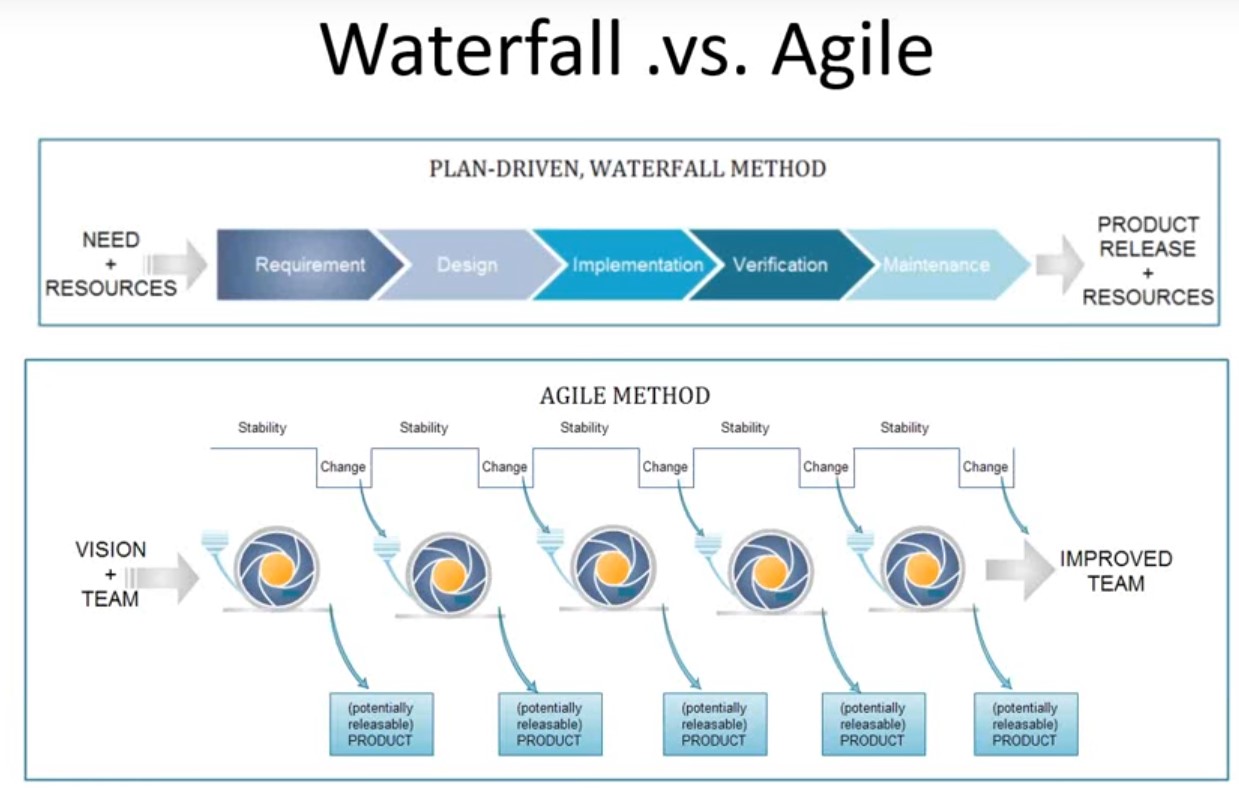

The image below displays the transition from traditional waterfall-based acceptance testing to Agile-based acceptance testing.

In Scrum/Agile methodology, the first sprint is sometimes called Sprint Zero or the Planning Phase; this is where we put our initial product backlog together. The product backlog should have at least enough stories to allow us to start working on the first one or two sprints, but ideally it should define enough of the overall scope, though not necessarily at the lowest level of granularity. For example, it could contain epics rather than stories, which allows us to do some release planning: we can see roughly what scope we plan, at this stage, to deliver to our client over a series of sprints, and discuss that with the client. We accept that this may change, and based on the client’s feedback we may get different priorities midway.

Similarly, our architects may be used to defining a big architecture up front in detail, but as an agile team we want to minimize that (although we may still want an architectural vision). If there are multiple teams, we want them to work within a common architectural design, but we do only the minimum needed to start working and delivering business value.

At this stage we also need to start thinking about testing:

- What kinds of risks are there?

- What kinds of tests do we have to do?

- How are we going to define our acceptance tests as part of the overall set of tests?

- Where are we going to do all of our testing? Will it be done in each sprint, or will certain tests be deferred to later sprints?

Finally, we may have an end-game phase, sometimes called a hardening sprint, before we do a release. This allows us to do certain activities that are difficult to do within sprints, such as system integration testing, performance testing and end-to-end testing.

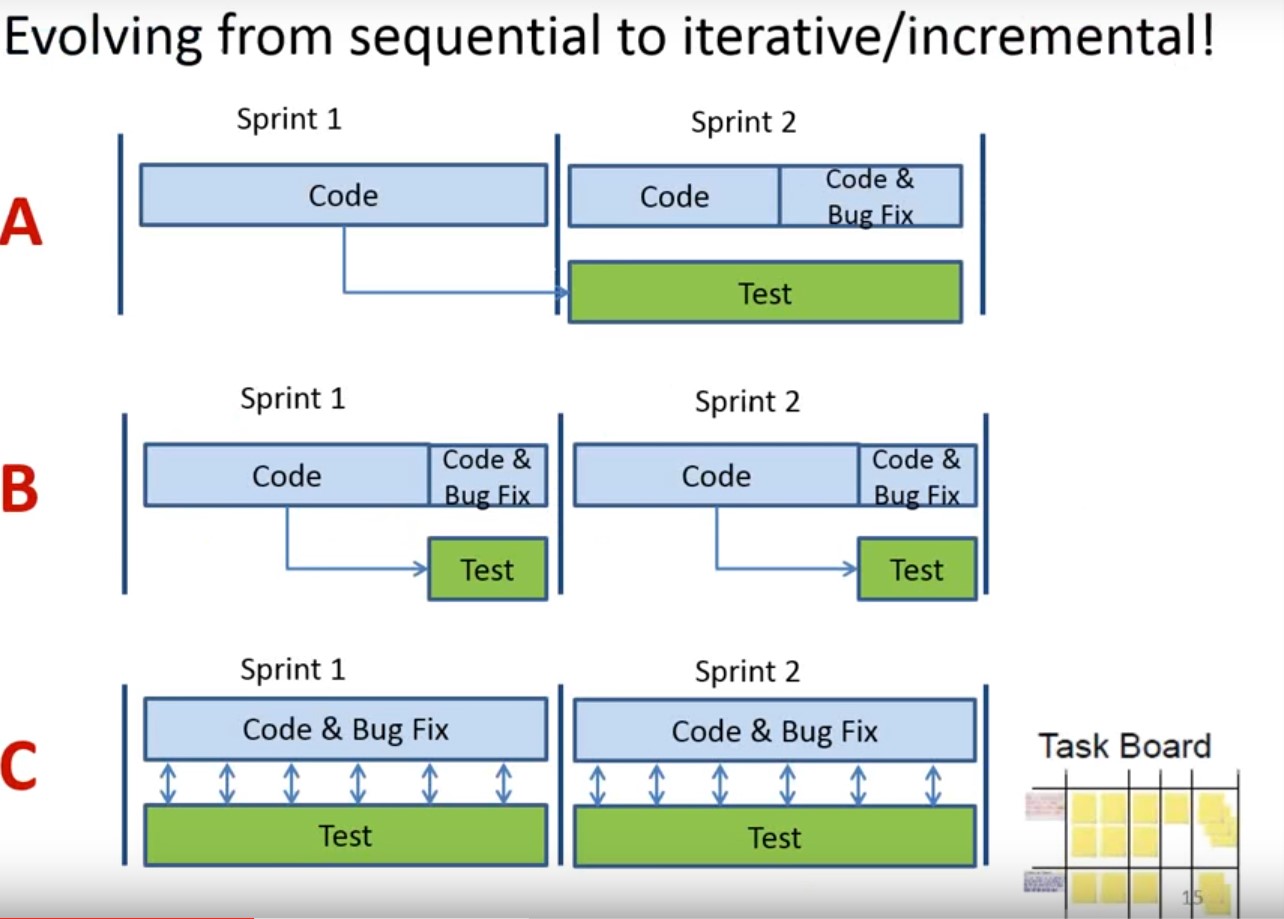

Example A: the worst scenario, where people just haven’t grasped that testing should be integrated with development. Testing is still a separate hand-over activity that runs a sprint behind development; it is very much based on hand-overs and sequential activities.

Example B: better, because at least testing is happening within each sprint. However, it is still not fully agile, because testing takes place at the end of the sprint.

Example C: a good example of agile-based testing, since testing happens throughout the sprint.

Two key points help us achieve Example C:

- Having user stories that are granular enough. You need stories that are small enough that you can allocate five to ten or even more of them to a team to complete within the sprint. That allows the team to stagger the development of these stories, so that you don’t start developing all your stories in parallel and have them all become available for testing on the last day of the sprint. Instead, you stagger your development: you work on two or three stories right from the start, but you don’t start working on the others until those are complete. This is depicted in the task board on the right-hand side of the figure above, which shows two stories in two rows with their associated tasks. You prioritize your stories and work from the top down, implementing the code for the first and second stories and starting to test them within two or three days, rather than leaving it all to the end. As you finish one story, you take the next, so you progress and complete stories, including all the testing associated with them, as you go through the sprint. Stories at the right level of granularity are therefore key to allowing testing to take place throughout the sprint and to be fully integrated.

- The second key point is test-driven development, both at the unit level (which is usually what people mean by TDD) and at the acceptance level. When we take a story and its associated acceptance criteria, the very first activity is to define the acceptance tests associated with it. Acceptance tests therefore form part of the definition of the requirement: they expand on it, each test case models the behaviour we require for that story, and developers use those test cases to clarify, enhance and add detail to their understanding of the story. These tests (the acceptance criteria of the story and the tests themselves) come from conversations between the team and the product owner.
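As a minimal sketch of acceptance-level test-first development, assume a hypothetical story, “As a shopper, I want to apply a discount code at checkout so that I pay a reduced price”, whose acceptance criteria were agreed with the product owner. The function `apply_discount`, the `DISCOUNT_CODES` table and the codes in it are invented for illustration. Each criterion becomes an executable test written before the implementation, and the implementation is only as much code as those tests demand.

```python
import unittest
from decimal import Decimal

# Hypothetical story: "As a shopper, I want to apply a discount code
# at checkout so that I pay a reduced price."
# The codes and rates below are invented for illustration only.
DISCOUNT_CODES = {"SAVE10": Decimal("0.10"), "HALF": Decimal("0.50")}


def apply_discount(total: Decimal, code: str) -> Decimal:
    """Minimal implementation, written only after the tests below existed."""
    rate = DISCOUNT_CODES.get(code.upper(), Decimal("0"))
    # Round to cents so results compare cleanly against the agreed examples.
    return (total * (1 - rate)).quantize(Decimal("0.01"))


class DiscountCodeAcceptanceTests(unittest.TestCase):
    """Each test mirrors one (hypothetical) acceptance criterion of the story."""

    def test_valid_code_reduces_total(self):
        # Given a basket of 50.00, when SAVE10 is applied, then the total is 45.00.
        self.assertEqual(apply_discount(Decimal("50.00"), "SAVE10"), Decimal("45.00"))

    def test_code_is_case_insensitive(self):
        # Given the same basket, when "save10" is typed in lower case, the result is the same.
        self.assertEqual(apply_discount(Decimal("50.00"), "save10"), Decimal("45.00"))

    def test_unknown_code_leaves_total_unchanged(self):
        # Given the same basket, when an unknown code is entered, the total is unchanged.
        self.assertEqual(apply_discount(Decimal("50.00"), "BOGUS"), Decimal("50.00"))
```

Saved as, say, `test_discount.py`, the file can be run with `python -m unittest test_discount`; in the spirit of the text, the story would not be considered complete until all three tests pass.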

An acceptance test is a formal description of the behaviour of a software product, generally expressed as an example or a usage scenario.

- In many cases the aim is that it should be possible to automate the execution of such tests with a software tool, either one built ad hoc by the development team or an off-the-shelf one.

- Similarly to a unit test, an acceptance test is generally understood to have a binary result: pass or fail.

- For many Agile teams, acceptance tests are the main form of functional specification, and sometimes the only formal expression of business requirements.

- The terms “functional test”, “acceptance test” and “customer test” are often used more or less interchangeably.

- A more specific term, “story test”, referring to user stories, is also used, as in the phrase “story test driven development”.
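The points above (an acceptance test expressed as a usage scenario, executed automatically, with a binary result) can be sketched in plain Python. The `Account` class and the `Scenario` type are hypothetical and hand-rolled for this example; they do not come from any testing tool. Off-the-shelf tools such as Cucumber offer a Given/When/Then structure in practice, and plain Python is used here only to keep the sketch self-contained.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Account:
    """Hypothetical system under test: a minimal bank account."""
    balance: int  # whole currency units, for simplicity

    def withdraw(self, amount: int) -> bool:
        """Withdraw if funds allow; return True on success."""
        if 0 < amount <= self.balance:
            self.balance -= amount
            return True
        return False


@dataclass
class Scenario:
    """An acceptance test expressed as a usage scenario (Given/When/Then)."""
    name: str
    given: Callable[[], Account]              # set up the starting state
    when: Callable[[Account], bool]           # perform the user action
    then: Callable[[Account, bool], bool]     # check the expected outcome

    def run(self) -> bool:
        # The result is binary: the scenario either passes (True) or fails (False).
        account = self.given()
        outcome = self.when(account)
        return self.then(account, outcome)


SCENARIOS = [
    Scenario(
        name="Withdrawal within balance succeeds",
        given=lambda: Account(balance=100),
        when=lambda acc: acc.withdraw(30),
        then=lambda acc, ok: ok and acc.balance == 70,
    ),
    Scenario(
        name="Withdrawal above balance is refused",
        given=lambda: Account(balance=20),
        when=lambda acc: acc.withdraw(50),
        then=lambda acc, ok: not ok and acc.balance == 20,
    ),
]


def run_all(scenarios: list[Scenario]) -> dict[str, bool]:
    """Run every scenario and map its name to pass (True) or fail (False)."""
    return {s.name: s.run() for s in scenarios}


if __name__ == "__main__":
    for name, passed in run_all(SCENARIOS).items():
        print(f"{'PASS' if passed else 'FAIL'}: {name}")
```

Run as a script, this prints a PASS line for each of the two scenarios. Keeping each scenario as named data, rather than free-form code, is one way to keep it close to the wording agreed with the product owner, which matters when acceptance tests double as the functional specification.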